AI Takes on the Data Quality Challenge

By embedding AI tools into their systems, vendors seek to enhance various data management processes.

By embedding AI tools into their systems, vendors aim to enhance various data management processes. Image courtesy of Getty.

Latest News

April 21, 2022

The impact of bad data is staggering. A recent Gartner study found that about 40% of enterprise data was inaccurate, incomplete or unavailable. Translate the cost of dirty data into real-world metrics, and the damage is almost incomprehensible.

IBM estimates that bad data costs the U.S. economy $3 trillion each year. Equally harmful, this failure of traditional data management systems hamstrings companies’ innovation efforts and cripples their digitization initiatives.

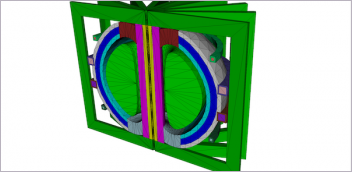

To counter this challenge, software developers—particularly PLM system providers—now turn to machine and deep learning (Fig. 1). By embedding AI tools into their systems, vendors aim to enhance various data management processes, improving accuracy and accelerating the pace at which users can derive and act on data-driven insights. This in turn will boost organizations’ efforts to extract full benefits of digitization strategies like digital threads and digital twins.

Data Challenge

Having a large volume and balanced variety of data is generally welcomed when an organization takes on a project that requires statistical methodologies or empirical modeling. Unfortunately, the same conditions increase potential for data-quality issues.

“Data quality is a paramount necessity in the digital age when building robust digital twins and digital threads,” says Jordan Reynolds, global director of data science at Kalypso. “As volume and variety increase, so do necessities and challenges around data quality. An organization needs a strategy to enhance data quality in a manner that allows digital threads to be created.”

It’s a Dirty Job

The need for a data quality strategy becomes more evident when you review shortcomings of traditional data science practices and tools. Data scientists spend almost 80% of their time wrestling with dirty data, performing mundane tasks like collecting, cleaning and preparing data for analysis, according to expert estimates. Of that 80%, a recent survey by CrowdFlower found that 60% of that time is spent cleaning and organizing data.

Data management systems like PLM platforms increasingly turn to AI technologies to eliminate bad data, enhancing data processing functions such as validation, cleansing and structuring. Image courtesy of Kalypso.

Herein lies the catch: though humans can perform most of the tasks that AI can in this area, AI can do it faster, more consistently and more accurately. This makes AI the prime candidate to automate time-consuming, repetitive tasks, with the goal of greatly increasing efficiency and opening the door for a new class of applications.

Intelligent Automation Solves the Problem

As a result, product lifecycle management (PLM) system developers have begun to recast platforms by empowering them with AI-automated processes. This automation helps PLM systems provide organizations with richer insights into how products have been developed, how decisions have been made in the past, and how those decisions have led to positive or negative outcomes.

“The increased volume and complexity of engineering data authored and imported into PLM systems makes for more robust digital threads and can lead to the development of new digital threads,” says Jason Fults, product manager for Teamcenter at Siemens Digital Industries Software. “For companies to use this data in a digital thread, however, they need to ensure that the data being added is accurate, reliable, complete and organized according to a well-defined classification taxonomy. This has created a real need for AI to help automate much of the preparation, profiling and classification of data.”

Accelerating Data Validation

One area in which AI can be used to enhance data quality is at the validation stage. The goal is to provide clean, relevant data sets for the application at hand, which in the long run will facilitate development of digital threads. AI-driven validation accomplishes this by identifying, removing or flagging inaccurate or anomalous inputs. This process is critical because it tells users what data can be trusted.

The traditional approach to data validation—still widely used today—is to manually apply a set of rule-based techniques to filter data. With the emergence of the IoT and big data, however, the volume of data that PLM systems must process has made manual validation impractical.

“While the traditional approach to data validation works well for smaller datasets with fewer attributes, it’s proven less effective in the era of big data, where datasets are highly dimensional and the volume of data produced is exponential,” says Fults. “AI enables data validation at scale by making it fast, accurate and affordable via automation.”

Most AI-based validation procedures perform one or more checks to ensure that the data meets the desired specifications. These checks test for things such as data type, value range, format and consistency.

A number of machine learning algorithms are used to accelerate the validation process. These enable users to achieve a fuller understanding of the data than traditional methods. One such algorithm is anomaly detection.

“AI can go beyond simple statistics and provide robust approximations of what a normal product should look like,” says Reynolds. “It can therefore identify what abnormalities and data mistakes look like as well. This allows an organization to move away from manual editing and review and go to automatic processes that detect abnormalities and help an organization correct them.”

There are also other machine learning algorithms that engineers can use for validation. These include dimensionality reduction, which eliminates variables that do not influence prediction; association rules, which identify data characteristics that often coexist; and clustering, which groups data based on similarity.

Separating Wheat from the Chaff

Determining data’s relevance and validity for an application is only the first step in the process to provide good data. Next is to identify and eliminate inappropriate, corrupt, inaccurate, inconsistent and incomplete data. This is where data profiling and cleaning come into play.

In the first of a two-step process, data profiling sifts through data sets to determine the data’s quality. This process aims to help organizations effectively extract valuable and actionable insights from data, identifying trends and interrelationships between different data sets.

Data profiling has traditionally consisted of exhaustive processes in which data scientists or IT staff manually calculate statistics like minimum, maximum, count and sum; identify format inconsistencies; and perform cross-column analysis to identify dependencies. This is accomplished by using a combination of formulas and codes to identify basic errors. Unfortunately, this process can take months to accomplish, and even then, data scientists can miss significant errors.

On the other hand, AI-driven data profiling accelerates and improves manual processes by automating much of the heavy lifting. Machine and deep learning algorithms detect data set characteristics (e.g., percentile and frequency) and uncover metadata, including important relationships and functional dependencies.

Rather than replace manual profiling, however, AI-driven processes complement it. This approach leverages AI’s speed and accuracy while taking advantage of humans’ superior ability to parse and understand data nuances.

The Cleansing Process

In the second step, data cleaning aims to remove corrupt, inaccurate or irrelevant inputs. The key difference between profiling and cleansing processes is that profiling identifies errors, and cleansing eliminates or corrects any errors.

Common inaccuracies include missing values, misplaced entries and typographical errors. In some cases, data cleansing must fill in or correct certain values. At other times, values must be removed altogether. Performing these processes manually has become prohibitively time-consuming, making the traditional approach impractical.

In contrast, machine and deep learning algorithms can identify bad data faster than data scientists and automate the cleansing processes with a higher degree of consistency and completeness than traditional methods.

Further, as the cleansing algorithms process more data, the more proficient the algorithms become at the cleansing process, which ultimately will improve performance of systems like PLM platforms.

“Better data cleaning will provide analysts and consumers of the data with better inputs to their systems, which will produce more accurate results and hence more value,” says Kareem Aggour, a principal scientist, digital research group, at GE Research.

AI tools are finding their way into mainstream software systems. “Methods for data cleansing that have long been used in data science communities are now available to mature data management platforms, including PLM,” says Reynolds. “We’ve observed that companies are now beginning to take advantage of them. Often, organizations are dealing with data that is incomplete or incorrect and have to rely on automated data cleansing processes to correct them to make their modeling efforts as accurate as possible.”

Making Sense of Data for Everyone

Once the data set is cleansed, it must be formatted to make it accessible to all potential users. This means bringing heterogenous data from multiple domains and sources, in disparate formats, together and structuring the data in a generally understandable form. The data must, therefore, be placed into context and captured in a semantic model.

A semantic model includes the basic meaning of the data, as well as the relationships that exist among the various elements. This provides for easy development of software applications and data consistency later on when the data is updated.

“We frequently use semantic models to link data of different types across different repositories,” says Aggour. “Semantic models are a popular tool within the AI field of knowledge representation and reasoning, which seeks to develop ways of capturing and structuring knowledge in a form that computers can use to infer new knowledge and make decisions or recommendations. This knowledge-driven approach to structuring and fusing data is gaining significant popularity. Today, we see several academic tools and commercial offerings in this space.”

The central idea is to develop a conceptual model of a domain, using vocabulary familiar to the domain experts and defining the model in a way that reflects how the experts see the domain.

Tools are then developed to map that domain model, or knowledge graph, to one or more physical data storage systems. The map specifies where data defined in the domain model is physically stored.

When users wish to retrieve or analyze data, they specify queries in the domain model, and the AI translates the request into one or more queries against the underlying storage systems. In this way, the AI algorithms serve as layers of abstraction between the data consumers and the data storage infrastructure.

The benefit of this AI-driven data fusion approach is twofold. First, it provides a seamless way for many different types of data to be integrated, such as joining relational data, images and time series data. Second, it allows data scientists to focus their attention on deriving value from the data and not concerning themselves with the management of the data (e.g., where it’s stored and how to query it). The primary drawback of this approach is that the semantic model can be challenging to develop, particularly if the data is highly complex.

AI-Driven Data Quality

Companies increasingly require data quality controls that can operate across large volumes of data and span complex data supply chains. To achieve this, PLM platforms must move beyond traditional data management practices and tools and lean more heavily on data management processes driven by AI technologies such as machine and deep learning.

Bear in mind, however, that these AI-driven processes are very much works in progress. The technology is far from plug and play. There are still challenges to be overcome.

“One of the primary challenges of using AI for data processing is that the AI will inherit the biases of its developers,” says Aggour. “Even developers with good intentions will have unconscious biases that may creep into how an AI algorithm is designed or how it is trained.”

Another challenge arises when an algorithm must differentiate between an error and a genuine anomaly. This becomes particularly relevant with time-series sensor data for assets that run for months at a time. In these cases, it’s not always obvious to the AI algorithm when it has detected a bad data point, the beginning of a change in the range of normal data, or a rare black swan event.

“We often see two scenarios in which the data may appear to need cleaning,” says Aggour. “In one instance, a sensor can go bad and start to report invalid readings. In another scenario, the equipment performance can start to degrade, in which case the sensor readings may deviate from past trends. In the latter instance, the data is accurate and should be kept as is, whereas in the former, the data is bad and requires cleaning. Separating the signal from the noise can be a real challenge, particularly when dealing with very large volumes of data, where the data may evolve over some axis, such as time.”

The reality is that AI is only as smart as its inputs and training, and there are still opportunities to improve AI-driven data-quality controls. That said, benefits delivered by the technology ensure its future role in the development of data threads and data twins.

“While AI isn’t a silver bullet, the combination of man and machine can improve performance, and form a partnership greater than the sum of its parts when used correctly,” says Brian Schanen, manager of the global PLM/PDM readiness program at Autodesk.

More Autodesk Coverage

More Siemens Digital Industries Software Coverage

Subscribe to our FREE magazine, FREE email newsletters or both!

Latest News